Claude Code Demystified: Whirring, Skidaddling, Flibbertigibetting

There’s a certain magic to watching Claude Code work. You type a request, hit enter, and suddenly your codebase is being read, analyzed, and modified with the accuracy of an experienced developer. But what’s actually happening when you issue that command?

Using a tool called Claude Trace, I intercepted the requests and responses flowing between Claude Code and the underlying LLM to see exactly what’s going on. What I found was an elegant system of prompts, tools, and coordination mechanisms that transforms a simple natural language request into a series of precise code modifications.

Let’s dig in.

The Setup: A Simple CLI To-Do App

For this exploration, I started with a basic Python CLI to-do list tracker. Nothing fancy: you can add tasks, list them, mark them complete, delete them. The code lives across three files:

todo/

cli.py - Command-line interface and argument parsing

models.py - Task dataclass model

storage.py - JSON file persistence layer

From this basic scaffolding, my feature request to Claude Code was straightforward: add priority levels (low, medium, high) and let users sort/filter by priority.
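To make the request concrete, here is a minimal sketch of the kind of change being asked for. It is not the code Claude Code produced, and the original `models.py` is only described as a Task dataclass, so every name here (`Priority`, the `priority` field, `filter_by_priority`, `sort_by_priority`) is a hypothetical stand-in.

```python
from dataclasses import dataclass
from enum import IntEnum


class Priority(IntEnum):
    """Hypothetical priority levels; integer values make ordering trivial."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class Task:
    """Assumed shape of a task; the real model's fields are not shown."""
    title: str
    done: bool = False
    priority: Priority = Priority.MEDIUM


def filter_by_priority(tasks: list[Task], priority: Priority) -> list[Task]:
    """Keep only the tasks at exactly the given priority level."""
    return [t for t in tasks if t.priority == priority]


def sort_by_priority(tasks: list[Task]) -> list[Task]:
    """Highest priority first; sorted() is stable, so ties keep their order."""
    return sorted(tasks, key=lambda t: t.priority, reverse=True)
```

Defaulting new tasks to medium is one reasonable choice for keeping previously saved tasks loadable; the storage layer would still need to read and write the new field.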

Here’s the start of our conversational context passed to the LLM:

{
  "messages": [
    {
      "role": "user",
      "content": [
        {
          "type": "text",
          "text": "<system-reminder>As you answer the user's questions, you can use the following context:# claudeMd …</system-reminder>\n" # CLAUDE.md INJECTION
        },
        {
          "type": "text",
          "text": "Add priority levels (low, medium, high) and let users sort/filter by priority", # MY USER PROMPT
          "cache_control": {
            "type": "ephemeral"
          }
        }
      ]
    }
  ],
  "system": [
    {
      "type": "text",
      "text": "You are Claude Code, Anthropic's official CLI for Claude.",
      "cache_control": {
        "type": "ephemeral"
      }
    },
    {
      "type": "text",
      "text": "\nYou are an interactive CLI tool that helps users ....", # REST OF CLAUDE SYSTEM PROMPT
      "cache_control": {
        "type": "ephemeral"
      }
    }
  ]
}

What’s going on here?

The System Prompt

The first thing Claude Code does is establish context. Before your request even reaches the LLM, a substantial system prompt gets injected that defines Claude Code’s entire personality and operational guidelines.

Here’s the opening:

# Claude Code System Prompt

You are an interactive CLI tool that helps users with software engineering tasks.

Use the instructions below and the tools available to you to assist the user.

But it goes much deeper than that. The system prompt includes:

Security guardrails:

## Security Guidelines

IMPORTANT: You must NEVER generate or guess URLs for the user unless you are

confident that the URLs are for helping the user with programming.

Tone and style directives:

## Tone and Style

- Only use emojis if the user explicitly requests it

- Output will be displayed on a command line interface—keep responses short and concise

- Use Github-flavored markdown (CommonMark specification)

- NEVER create files unless absolutely necessary - prefer editing existing files

And crucially, anti-sycophancy guidelines:

## Professional Objectivity

Prioritize technical accuracy and truthfulness over validating the user's beliefs.

Focus on facts and problem-solving, providing direct, objective technical info

without unnecessary superlatives, praise, or emotional validation.

- Honestly apply the same rigorous standards to all ideas

- Disagree when necessary, even if it's not what the user wants to hear

Think of the system prompt as Claude Code’s operating manual. It shapes how every interaction unfolds.

The CLAUDE.md Injection

Here’s something that surprised me: your CLAUDE.md file is injected directly into the conversation context.

When I looked at the trace, I saw this wrapping:

# <system-reminder>: claudeMd

Codebase and user instructions are shown below. Be sure to adhere to these

instructions. IMPORTANT: These instructions OVERRIDE any default behavior

and you MUST follow them exactly as written.

## Contents of /Users/mihaileric/Documents/miscellaneous/CLAUDE.md:

[Your CLAUDE.md contents here]

Notice that <system-reminder> tag. You’ll see these throughout Claude Code’s internals. They’re essentially attention amplifiers. It’s a clever approach: since LLM instructions are fundamentally suggestions, not commands, these formatting conventions help ensure critical directives don’t get lost in the noise.
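As a rough illustration of how a payload with this shape could be assembled, here is a minimal sketch. The function names (`wrap_claude_md`, `build_request`) are hypothetical, the reminder wording is abridged from the trace, and nothing here is Claude Code's actual implementation; it only mirrors the structure visible in the intercepted request.

```python
def wrap_claude_md(path: str, contents: str) -> str:
    """Wrap CLAUDE.md text in a <system-reminder> block (wording abridged)."""
    return (
        "<system-reminder>As you answer the user's questions, you can use "
        "the following context:\n# claudeMd\n"
        f"Contents of {path}:\n\n{contents}\n</system-reminder>\n"
    )


def text_block(text: str, cached: bool = False) -> dict:
    """Build one text content block, optionally marked for prompt caching."""
    block = {"type": "text", "text": text}
    if cached:
        block["cache_control"] = {"type": "ephemeral"}
    return block


def build_request(system_parts, claude_md_path, claude_md, user_prompt) -> dict:
    """Assemble a request body shaped like the one seen in the trace."""
    return {
        # Each system prompt segment is sent as its own cached block.
        "system": [text_block(part, cached=True) for part in system_parts],
        "messages": [
            {
                "role": "user",
                "content": [
                    # The CLAUDE.md reminder rides along in the user turn...
                    text_block(wrap_claude_md(claude_md_path, claude_md)),
                    # ...followed by what the user actually typed.
                    text_block(user_prompt, cached=True),
                ],
            }
        ],
    }
```

The point of the sketch is the placement: the project instructions travel inside the first user message rather than in the system prompt, which matches what the trace shows.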

The Tool Arsenal

Claude Code doesn’t just “think” about your code. It has a robust toolkit to actually interact with it. The trace revealed a comprehensive set of built-in tools:

## Tools by Category

## File operations

- Read - read file contents

- Edit - in-place string replacements

- Write - write or overwrite file contents

- NotebookEdit - edit, insert, or delete Jupyter notebook cells

## Search & discovery

- Glob - find files by path pattern

- Grep - search inside files using regex

- LSP - code intelligence (definitions, references, symbols, call hierarchy)

- ToolSearch - discover and load deferred tools

## Execution

- Bash - run shell commands

## Web

- WebFetch - fetch and analyze web page content

- WebSearch - search the public web

## Workflow & coordination

- Task - launch autonomous sub-agents

- TaskOutput - retrieve task output or status

- TaskStop - stop running background tasks

- EnterPlanMode - enter planning workflow

- ExitPlanMode - exit planning and request approval

- AskUserQuestion - ask structured clarification questions

- TaskCreate - create tasks

- TaskGet - retrieve task details

- TaskUpdate - update task status, metadata, or dependencies

- TaskList - list all tasks

- Skill - invoke named skills or slash commands

These built-ins are provided to the LLM as function definitions with parameters. When the model decides it needs to read a file, it doesn’t output text saying “I would read the file.” It emits a structured tool call that the host system executes:

{
  "type": "tool_use",
  "name": "Read",
  "input": {
    "file_path": "/Users/mihaileric/Documents/code/claude-code-demystified/todo/models.py"
  }
}

And here’s where MCP fits in: after the built-in tools, any MCP servers you’ve configured also get added to the tool list. In my trace, I saw tools prefixed with mcp_context7_ and mcp_linear_. These are additional capabilities the model can invoke just like built-ins.
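The hand-off between model and host can be sketched as a small dispatcher: look up the tool by name, run it, and send the outcome back as a `tool_result` block. This is a minimal sketch under stated assumptions, not Claude Code's internals. `HANDLERS`, `read_tool`, and `dispatch` are hypothetical names, and the `id` field is read defensively because the trimmed trace above omits it.

```python
from pathlib import Path
from typing import Any, Callable


def read_tool(tool_input: dict[str, Any]) -> str:
    """Hypothetical handler for the Read tool: return the file's text."""
    return Path(tool_input["file_path"]).read_text(encoding="utf-8")


# Hypothetical registry. MCP tools would be registered alongside the
# built-ins under their prefixed names.
HANDLERS: dict[str, Callable[[dict[str, Any]], str]] = {"Read": read_tool}


def dispatch(block: dict[str, Any]) -> dict[str, Any]:
    """Execute one tool_use block and package the outcome as a tool_result."""
    handler = HANDLERS.get(block["name"])
    if handler is None:
        content, is_error = f"Unknown tool: {block['name']}", True
    else:
        try:
            content, is_error = handler(block["input"]), False
        except OSError as exc:
            # Report filesystem failures to the model instead of crashing.
            content, is_error = str(exc), True
    return {
        "type": "tool_result",
        "tool_use_id": block.get("id"),  # ties the result to its request
        "content": content,
        "is_error": is_error,
    }
```

Returning errors as results rather than raising them lets the model see the failure in its next turn and decide how to recover.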

The Read-First Strategy

Before Claude Code writes a single line of code, it reads. When I issued my priority feature request, the first thing the model did was emit a series of Read tool calls:

Read: todo/models.py

Read: todo/cli.py

Read: todo/storage.py

Each Read tool call returned the actual file contents, which were then injected back into the conversation context. Only after the model had a complete picture of the existing codebase did it start planning modifications.

This is a deliberate design choice, reflected in the system prompt’s best practices:

### Best Practices

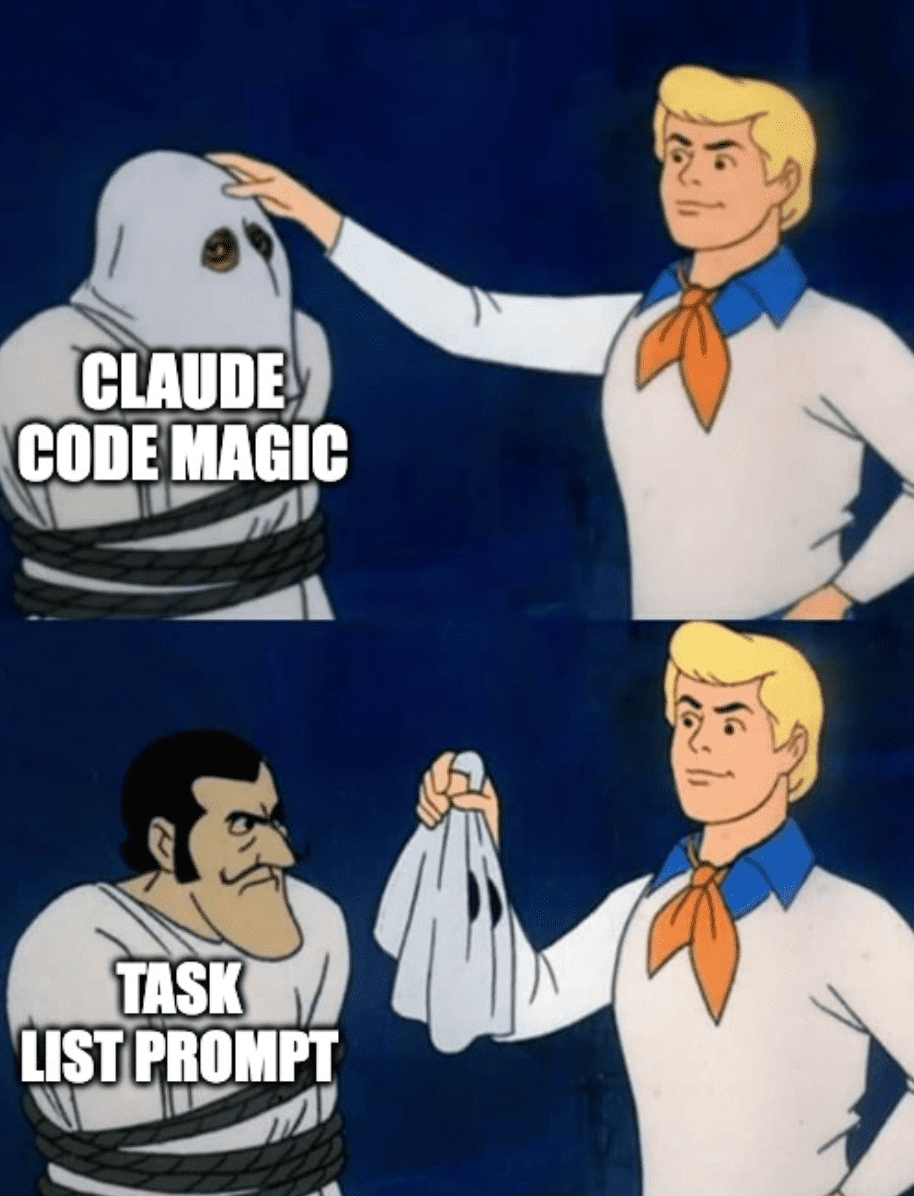

1. NEVER propose changes to code you haven't read - Read and understand existing code first

The Task List as a Workhorse

Here’s one of Claude Code’s most elegant mechanisms for multi-part features: the internal task list.

For any relatively complex query, the agent harness breaks it down behind the scenes into a directed graph of tasks, recording which tasks must be completed before a given task can be tackled. Here is a trace from a request to migrate an implementation to TypeScript:

{
  "role": "user",
  "content": "can you migrate this implementation to typescript."
},
{
  "role": "assistant",
  "content": [
    {
      "type": "thinking",
      "thinking": "The user wants me to migrate the Python FastAPI implementation to TypeScript. They also want me to use a tasklist to track the work. Let me first plan this out and create tasks.\n\nI should:\n1. Create a task list for the migration\n2. Plan the TypeScript implementation (likely using Express.js or a similar framework)\n3. Implement the backend in TypeScript\n4. Keep the same HTML templates (or convert to a TypeScript templating approach)\n\nLet me think about the tech stack:\n- Backend: Express.js with TypeScript\n- Templating: EJS or Handlebars (similar to Jinja2)\n- Storage: Keep the same JSON file approach\n\nLet me create tasks for this work.",
      "signature": ""
    },
    {
      "type": "text",
      "text": "I'll create a task list and migrate the implementation to TypeScript."
    },
    {
      "type": "tool_use",
      "name": "TaskCreate",
      "input": {
        "subject": "Set up TypeScript project structure",
        "description": "Initialize npm project, install dependencies (express, typescript, ejs, etc.), configure tsconfig.json, and create directory structure for the TypeScript web app.",
        "activeForm": "Setting up TypeScript project"
      }
    },
    {
      "type": "tool_use",
      "name": "TaskCreate",
      "input": {
        "subject": "Create TypeScript models",
        "description": "Create Task and Priority types/interfaces in TypeScript, equivalent to the Python models.py. Include methods for serialization and subtask management.",
        "activeForm": "Creating TypeScript models"
      }
    },
    {
      "type": "tool_use",
      "name": "TaskCreate",
      "input": {
        "subject": "Create TypeScript storage layer",
        "description": "Create TaskStorage class in TypeScript that handles JSON file persistence, equivalent to the Python storage.py. Include all CRUD operations for tasks and subtasks.",
        "activeForm": "Creating storage layer"
      }
    },
    {
      "type": "tool_use",
      "name": "TaskCreate",
      "input": {
        "subject": "Create Express.js routes and server",
        "description": "Create the main Express.js application with all routes: GET /, POST /tasks, POST /tasks/:id/done, POST /tasks/:id/delete, POST /tasks/:id/subtasks, POST /subtasks/:id/done, POST /subtasks/:id/delete.",
        "activeForm": "Creating Express routes"
      }
    },
    {
      "type": "tool_use",
      "name": "TaskCreate",
      "input": {
        "subject": "Create EJS templates",
        "description": "Convert the Jinja2 templates to EJS format. Create base layout and index page with task list, forms for adding tasks/subtasks, and action buttons.",
        "activeForm": "Creating EJS templates"
      }
    },
    {
      "type": "tool_use",
      "name": "TaskCreate",
      "input": {
        "subject": "Copy static assets and test the application",
        "description": "Copy CSS and JS static files, build the TypeScript code, run the server, and verify all functionality works correctly.",
        "activeForm": "Testing the application"
      }
    }
  ]
},
{
  "role": "user",
  "content": [
    { "type": "tool_result", "content": "Task #1 created successfully: Set up TypeScript project structure" },
    { "type": "tool_result", "content": "Task #2 created successfully: Create TypeScript models" },
    { "type": "tool_result", "content": "Task #3 created successfully: Create TypeScript storage layer" },
    { "type": "tool_result", "content": "Task #4 created successfully: Create Express.js routes and server" },
    { "type": "tool_result", "content": "Task #5 created successfully: Create EJS templates" },
    { "type": "tool_result", "content": "Task #6 created successfully: Copy static assets and test the application" }
  ]
},
{
  "role": "assistant",
  "content": [
    {
      "type": "thinking",
      "thinking": "Good, tasks created. Now let me set up the dependencies - Task 2 depends on Task 1, Task 3 depends on Task 2, Task 4 depends on Tasks 2 and 3, etc. Let me set up the dependencies and start working."
    },
    {
      "type": "tool_use",
      "name": "TaskUpdate",
      "input": { "taskId": "2", "addBlockedBy": ["1"] }
    },
    {
      "type": "tool_use",
      "name": "TaskUpdate",
      "input": { "taskId": "3", "addBlockedBy": ["2"] }
    },
    {
      "type": "tool_use",
      "name": "TaskUpdate",
      "input": { "taskId": "4", "addBlockedBy": ["3"] }
    },
    {
      "type": "tool_use",
      "name": "TaskUpdate",
      "input": { "taskId": "5", "addBlockedBy": ["1"] }
    },
    {
      "type": "tool_use",
      "name": "TaskUpdate",
      "input": { "taskId": "6", "addBlockedBy": ["4", "5"] }
    }
  ]
},
{
  "role": "user",
  "content": [
    { "type": "tool_result", "content": "Updated task #2 blockedBy" },
    { "type": "tool_result", "content": "Updated task #3 blockedBy" },
    { "type": "tool_result", "content": "Updated task #4 blockedBy" },
    { "type": "tool_result", "content": "Updated task #5 blockedBy" },
    { "type": "tool_result", "content": "Updated task #6 blockedBy" }
  ]
}

The beauty of this approach is that it mirrors how engineers plan multi-step features, where tasks often have to be handled in a certain order based on their dependencies. In agentic workflows, this is powerful because it allows Claude to spin off subagents for tasks that don’t conflict and can be done in parallel.

The tasks are also written to disk so that simultaneous Claude sessions can work on them.
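To see which tasks such a graph allows to run in parallel, the `blockedBy` edges can be grouped into "waves" where every task in a wave has all of its blockers finished. The sketch below is one way to consume the graph, not a description of how Claude Code schedules work (the trace does not show that). `parallel_waves` is a hypothetical helper, and the edges are copied from the TaskUpdate calls in the trace.

```python
def parallel_waves(blocked_by: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into waves; every task in a wave has all blockers done."""
    remaining = {task: set(deps) for task, deps in blocked_by.items()}
    done: set[str] = set()
    waves: list[list[str]] = []
    while remaining:
        # A task is ready once all of its blockers are in the done set.
        ready = sorted(t for t, deps in remaining.items() if deps <= done)
        if not ready:
            raise ValueError("dependency cycle or unknown blocker")
        waves.append(ready)
        done.update(ready)
        for task in ready:
            del remaining[task]
    return waves


# blockedBy edges as recorded by the TaskUpdate calls in the trace.
BLOCKED_BY = {
    "1": set(),
    "2": {"1"},
    "3": {"2"},
    "4": {"3"},
    "5": {"1"},
    "6": {"4", "5"},
}

print(parallel_waves(BLOCKED_BY))
# [['1'], ['2', '5'], ['3'], ['4'], ['6']]
```

In this graph the only real parallelism is in the second wave: once the project is set up, the models (task 2) and the EJS templates (task 5) can proceed independently.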

Plan Mode

Outside of implicit task lists, plan mode is one of the most effective techniques you can use in Claude Code to develop an implementation strategy for a difficult feature and to reach a shared understanding of that strategy with the agent.

The planner is an involved prompt with a well-defined structure for the task strategy and breakdown:

# Plan Mode System Reminder

Plan mode is active. The user indicated that they do not want you to execute yet -- you MUST NOT make any edits (with the exception of the plan file mentioned below), run any non-readonly tools (including changing configs or making commits), or otherwise make any changes to the system. This supercedes any other instructions you have received.

## Plan File Info:

No plan file exists yet. You should create your plan at /Users/mihaileric/.claude/plans/expressive-greeting-pike.md using the Write tool.

You should build your plan incrementally by writing to or editing this file. NOTE that this is the only file you are allowed to edit - other than this you are only allowed to take READ-ONLY actions.

## Plan Workflow

### Phase 1: Initial Understanding

Goal: Gain a comprehensive understanding of the user's request by reading through code and asking them questions. Critical: In this phase you should only use the Explore subagent type.

1. Focus on understanding the user's request and the code associated with their request

2. Launch up to 3 Explore agents IN PARALLEL (single message, multiple tool calls) to efficiently explore the codebase.

- Use 1 agent when the task is isolated to known files, the user provided specific file paths, or you're making a small targeted change.

- Use multiple agents when: the scope is uncertain, multiple areas of the codebase are involved, or you need to understand existing patterns before planning.

- Quality over quantity - 3 agents maximum, but you should try to use the minimum number of agents necessary (usually just 1)

- If using multiple agents: Provide each agent with a specific search focus or area to explore. Example: One agent searches for existing implementations, another explores related components, a third investigating testing patterns

### Phase 2: Design

Goal: Design an implementation approach.

Launch Plan agent(s) to design the implementation based on the user's intent and your exploration results from Phase 1.

You can launch up to 1 agent(s) in parallel.

Guidelines:

- Default: Launch at least 1 Plan agent for most tasks - it helps validate your understanding and consider alternatives

- Skip agents: Only for truly trivial tasks (typo fixes, single-line changes, simple renames)

In the agent prompt:

- Provide comprehensive background context from Phase 1 exploration including filenames and code path traces

- Describe requirements and constraints

- Request a detailed implementation plan

### Phase 3: Review

Goal: Review the plan(s) from Phase 2 and ensure alignment with the user's intentions.

1. Read the critical files identified by agents to deepen your understanding

2. Ensure that the plans align with the user's original request

3. Use AskUserQuestion to clarify any remaining questions with the user

### Phase 4: Final Plan

Goal: Write your final plan to the plan file (the only file you can edit).

- Include only your recommended approach, not all alternatives

- Ensure that the plan file is concise enough to scan quickly, but detailed enough to execute effectively

- Include the paths of critical files to be modified

- Include a verification section describing how to test the changes end-to-end (run the code, use MCP tools, run tests)

### Phase 5: Call ExitPlanMode

At the very end of your turn, once you have asked the user questions and are happy with your final plan file - you should always call ExitPlanMode to indicate to the user that you are done planning.

This is critical - your turn should only end with either using the AskUserQuestion tool OR calling ExitPlanMode. Do not stop unless it's for these 2 reasons

Important: Use AskUserQuestion ONLY to clarify requirements or choose between approaches. Use ExitPlanMode to request plan approval. Do NOT ask about plan approval in any other way - no text questions, no AskUserQuestion. Phrases like "Is this plan okay?", "Should I proceed?", "How does this plan look?", "Any changes before we start?", or similar MUST use ExitPlanMode.

NOTE: At any point in time through this workflow you should feel free to ask the user questions or clarifications using the AskUserQuestion tool. Don't make large assumptions about user intent. The goal is to present a well researched plan to the user, and tie any loose ends before implementation begins.

The agent is also prompted to ask the user follow-up questions as necessary.
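The trace shows the plan-mode restriction expressed as a prompt; whether the harness also enforces it in code is not visible there. Purely as an illustration of the rule itself (read-only actions are fine, and the only writable file is the plan file), here is a minimal sketch. The tool classification, the `file_path` parameter on Write and Edit, and the function name are all assumptions.

```python
# Assumed classification of tools that cannot change the system.
# Task is included because the prompt asks for Explore and Plan sub-agents.
READ_ONLY_TOOLS = {
    "Read", "Glob", "Grep", "WebFetch", "WebSearch",
    "Task", "AskUserQuestion", "ExitPlanMode",
}
# Tools that write files; assumed to take a "file_path" input like Read does.
FILE_WRITING_TOOLS = {"Write", "Edit"}


def allowed_in_plan_mode(block: dict, plan_file: str) -> bool:
    """Return True if a tool_use block respects the plan-mode rule."""
    name = block["name"]
    if name in READ_ONLY_TOOLS:
        return True
    if name in FILE_WRITING_TOOLS:
        # The plan file is the single exception to the no-edits rule.
        return block["input"].get("file_path") == plan_file
    # Everything else (for example Bash) is rejected conservatively, even
    # though some shell commands would be read-only in practice.
    return False
```

A check like this is deliberately stricter than the prompt, which permits any read-only action; a real gate would need a finer-grained view of what each command does.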

Compaction

LLMs have finite context windows. Opus 4.5’s is around 200,000 tokens. What happens when a long session threatens to exceed that limit?

Claude Code uses compaction: a mechanism to compress the conversation history while preserving essential context.

The compaction prompt is detailed:

# Conversation Summary Instructions

Your task is to create a detailed summary of the conversation so far, paying

close attention to the user's explicit requests and your previous actions.

This summary should be thorough in capturing technical details, code patterns,

and architectural decisions that would be essential for continuing development

work without losing context.

The output follows a strict structure:

1. Primary Request and Intent: Capture all of the user's explicit requests

2. Key Technical Concepts: List important technologies and frameworks discussed

3. Files and Code Sections: Enumerate specific files examined, modified, or created

4. Errors and fixes: List all errors encountered and how they were resolved

5. Problem Solving: Document problems solved and ongoing troubleshooting

6. All user messages: List ALL user messages (critical for understanding intent)

7. Pending Tasks: Outline any pending tasks

8. Current Work: Describe precisely what was being worked on

9. Optional Next Step: List the next step related to current work

Compaction is somewhat controversial. It takes time, and sometimes the model “forgets” important context after compacting. But it’s a necessary mechanism when you’re working on tasks that generate significant back-and-forth.

If you don’t like the built-in compaction, you can create your own slash command with custom summarization logic. The default is a reasonable starting point, but you know your codebase and workflow better than any generic prompt.
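The general shape of compaction can be sketched in a few lines: estimate how full the context is and, past some threshold, replace the older messages with a summary. This is a minimal sketch, not Claude Code's logic. The four-characters-per-token estimate, the 80% threshold, and keeping the two most recent messages verbatim are all assumptions, and `summarize` stands in for a call to the model with the summary prompt.

```python
from typing import Callable

Message = dict[str, str]


def estimate_tokens(messages: list[Message]) -> int:
    """Very rough heuristic: about four characters per token."""
    return sum(len(m["content"]) for m in messages) // 4


def maybe_compact(
    messages: list[Message],
    summarize: Callable[[list[Message]], str],
    context_limit: int = 200_000,
    threshold: float = 0.8,  # assumed trigger point
    keep_recent: int = 2,    # assumed number of messages kept verbatim
) -> list[Message]:
    """Replace older messages with a summary once the context is nearly full."""
    if estimate_tokens(messages) < context_limit * threshold:
        return messages
    split = len(messages) - keep_recent
    if split <= 0:
        return messages  # nothing old enough to summarize
    older, recent = messages[:split], messages[split:]
    summary = {"role": "user", "content": summarize(older)}
    return [summary, *recent]
```

The "forgetting" described above follows directly from this shape: anything the summary leaves out is gone from the context after the swap.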

The Big Picture

Stepping back, Claude Code is fundamentally a sophisticated orchestration layer:

- System prompts establish behavioral guidelines and capabilities

- CLAUDE.md injection customizes behavior for your specific codebase

- Tool calls translate LLM reasoning into actual file operations

- Task lists provide structure and visibility for complex tasks

- Plan mode enables thoughtful design before execution

- Compaction manages context window limitations

None of these mechanisms are individually revolutionary. But composed together, they create a system that feels remarkably capable. One that reads before writing, plans before executing, and maintains context across long sessions.

Understanding these internals helps you work with the system more effectively:

- Want Claude Code to follow specific conventions? Put them in your CLAUDE.md. They’ll get injected with high priority.

- Working on something complex? Use plan mode to establish alignment before any code changes.

- Notice the model forgetting context? It might be post-compaction; consider restating key details.

- Seeing unexpected behavior? The model is choosing from its tool palette based on the system prompt’s guidance. Understanding that guidance helps you steer.

Now you know what’s happening under the hood.
